Introduction

Apache NiFi is a powerful tool to automate dataflow between systems. It provides web-based easy-to-use interfaces to perform data transformation and transportation between common data sources. Extracting and loading data are implemented through standard application interfaces such as JDBC. These features make the migration of data from other databases to Mimer SQL a simple task using NiFi. This article presents an example of migrating data from PostgreSQL to Mimer SQL, but the process can be easily applied to other databases such as Oracle, MS SQLServer and MySQL.

Preparation before migration

Source database preparations

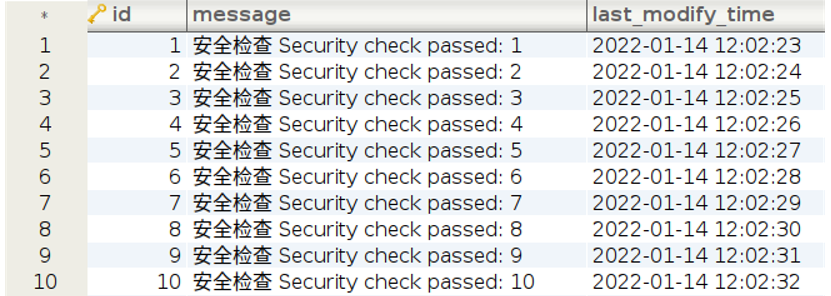

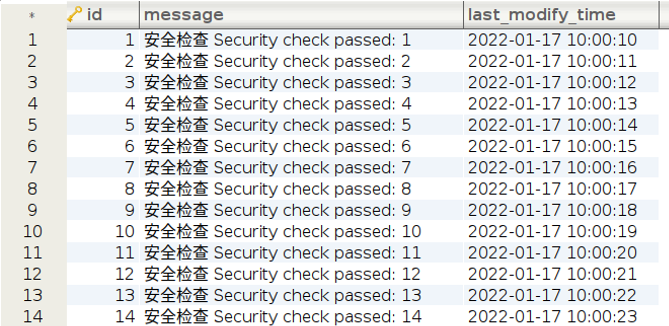

The data source can be any relational or non-relational databases. In this example, the data source is a table called log_data in a PostgreSQL database called test_db. The table contains 1 million rows of data with log messages and timestamps, as shown in Fig. 1.

Fig. 1 Data in the source PostgreSQL table

Destination database preparations

The destination is a Mimer SQL database, in this example, a database called tempdb. Before migration, we create the table log_data for the data to be migrated.

CREATE TABLE LOG_DATA ( ID INTEGER PRIMARY KEY, MESSAGE NATIONAL CHARACTER VARYING(400), LAST_MODIFY_TIME TIMESTAMP(6) );

NiFi preparations

Download NiFi binary, or build it from its source, from Apache Nifi. After starting it, one can navigate to https://localhost:8443/nifi from the web browser. This example uses version 1.15.

Data migration using NiFi

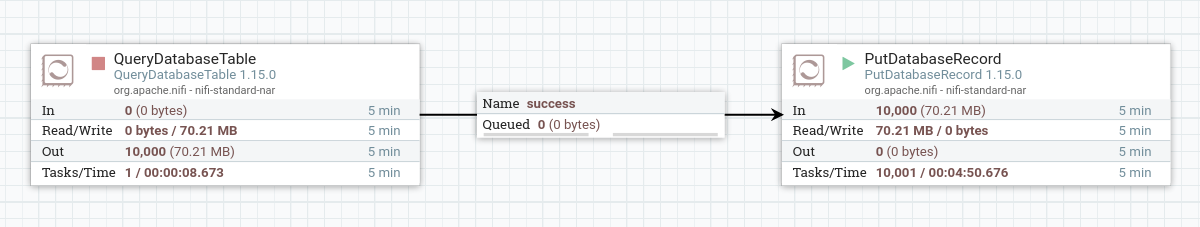

The migration of data in NiFi is performed through a pipeline consisting of a series of NiFi “processors”, each executing a step in the migration process: extracting data from the source, various transformations, loading data into the destination, etc. Fig. 2 presents a pipeline used in this example that migrates data from the table log_data in the PostgreSQL database into Mimer SQL.

Fig. 2 A NiFi pipeline to migrate data from PostgreSQL to Mimer SQL

The processors in the pipeline

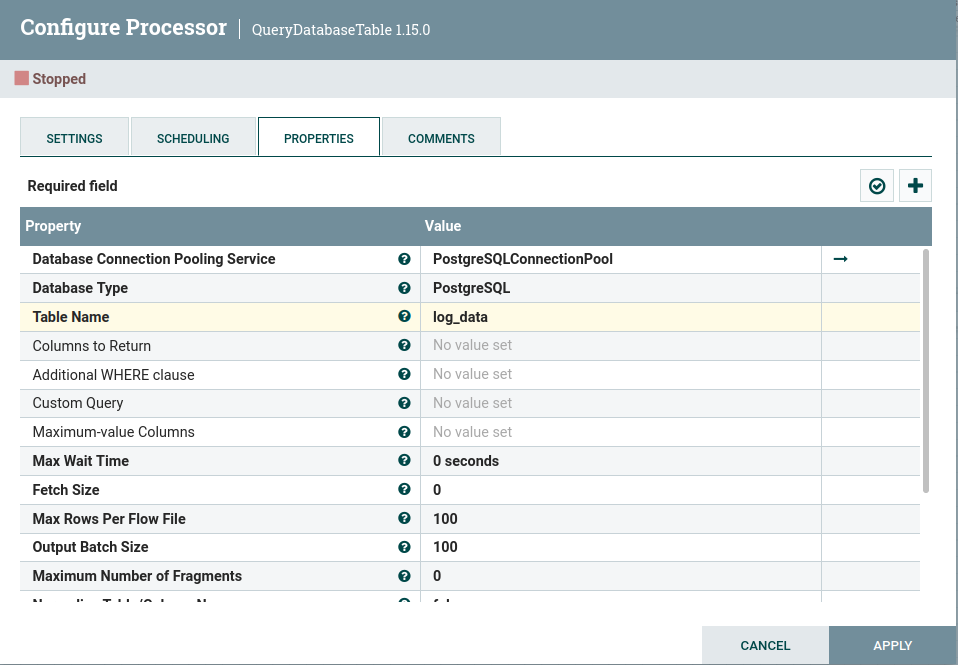

QueryDatabaseTable processor

This processor reads data from the PostgreSQL database. Its configuration is shown in Fig. 3. One can also specify the columns, the WHERE clause, or even a custom query to filter the fetched data. Here we only specify the table name, so that the processor simply reads all rows and columns from log_data. The extracted data are organized as internal flow files and are grouped as batched, which are also configurable.

Fig. 3 Configuration of QueryDatabaseTable

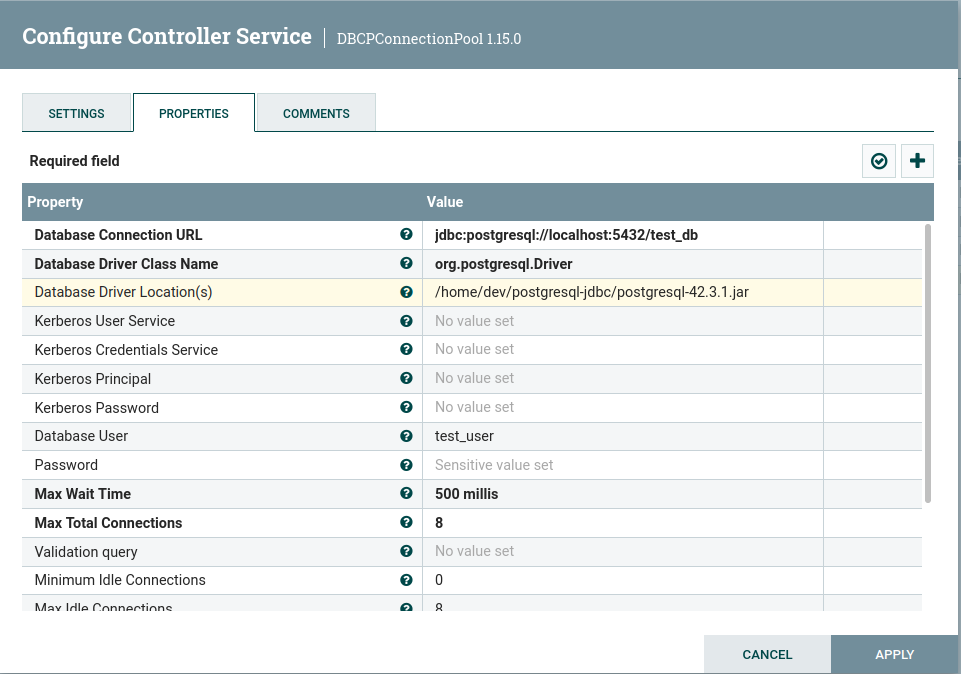

The database connection is configured in the “Database Connection Pooling Service” in the processor. Fig. 4 shows the controller service for the PostgreSQL database. One needs to specify the PostgresSQL JDBC connection URL, driver class name, and the location of the driver. The user and password for database access is also configured in this service. One may also specify the maximal allowed concurrent connections.

Fig. 4 Configuration of PostgreSQL connection pooling service

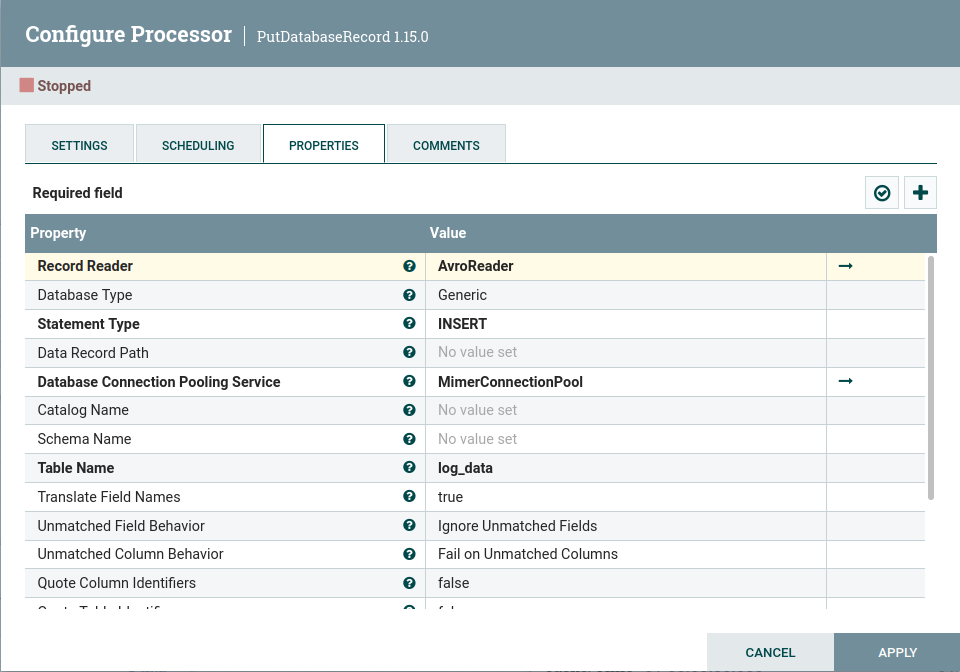

PutDatabaseRecord processor

This processor manipulates data in a table, and we use it to load data into the destination database. Fig. 5 shows its configuration. The “Record Reader” is AvroReader which reads the NiFi internal data file passed from the previous processor. We choose the “Generic” database type, which is suitable for databases that complies to the ASNI SQL standard. The statement type is INSERT, and the table name is log_data.

Fig. 5 Configuration of PutDatabaseRecord

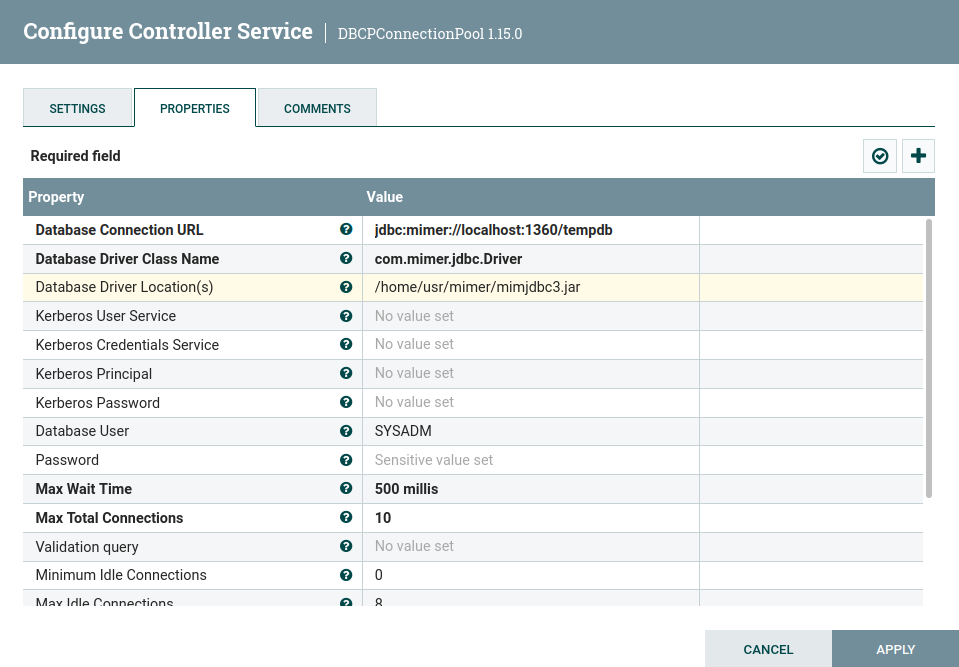

The database connection pooling service for Mimer SQL is shown in Fig. 6. Here one specifies the Mimer JDBC URL, driver class name, driver location, database user and password.

Fig. 6 Configuration of Mimer SQL connection pooling service

Migration result

When the pipeline is configured as above, one can start the pipeline, which automatically migrates data from the specified PostgreSQL table to the Mimer SQL table (Fig. 7).

Fig. 7 Migrated data in the destination Mimer SQL log_data table

Remarks

There are several other processors in NiFi that are useful for database migration. For example:

- ExecuteSQL and ExecuteSQLRecord: Execute a SQL select query.

- PutSQL: Execute a SQL update or insert command.

- GenerateTableFetch: Generate SQL select queries that fetch “pages” of rows from a table.

- ListDatabaseTables: Generate a set of flow files, each containing the metadata about a table.

They can be combined with other transformation processors for more complex data transformation tasks. More information about different processors in NiFi documentation: https://nifi.apache.org/docs.html.